Review: The Big Short – Is it wrong to profit from misfortune you’re powerless to prevent?

Featuring Steve Carell as Angry Guy and Ryan Gosling as Slick Dude.

The Big Short probably shouldn’t exist as a movie. As an explanation of exactly how and why the 2008 financial meltdown happened, it’s fascinating, and it does a reasonable job of laying out the series of events. But if you’ve read enough news articles, or listened to some of the great podcasts from This American Life or Planet Money since these events unfolded, it doesn’t offer much new information. As a story about a few specific finance guys who saw it coming and took action, it’s compelling, but it’s also packed to the gills with journalism and outright explaining disguised as drama, just so the audience can follow along.

The result feels like a mix of a Michael Moore movie (specific agenda and point of view, humorous fourth-wall-breaking style) and the most star-studded, entertaining dramatization ever to escape what could otherwise have been a talking-head documentary. Its script makes it fun while its facts make it depressing; it has a stylish tone and voice I enjoyed, but it comes off as schizophrenic about what type of movie it wants to be.

But that’s the film as an experience. Strangely, the movie seems only glancingly concerned with the moral questions involved. It clearly takes the stance that “The Big Banks are Evil,” which pretty much every non-rich person agrees with going in. The handful of traders and fund managers who saw the signs early enough to profit serve as our gateway into the story, a useful device for all the explaining the film has to do as they figure it all out. But while the movie paints these people as our “heroes” (we follow their actions, we root for them to succeed), it pays only lip service to the fact that their success comes on the backs of millions of people losing their homes or jobs, and of the entire globe suffering a huge financial disaster. There’s a lot of glee at them pulling it all off, and only a couple of quiet moments of realization about the implications. It’s so interested in using these characters to make a bigger point about “the system” that it sweeps the possibly more nuanced character question under the rug in the process.

So.

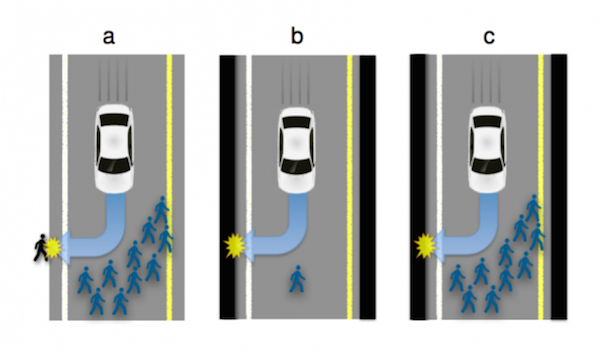

If you know something terrible is going to happen, affecting millions of people, but stopping it is out of your control, is it wrong to take action to personally profit from that tragedy?