Why do we react so differently to autonomous car crashes?

By law, the pre-self-driving stage still requires nerds to sit nervously in driver seats, unsure what to do with their hands but excited to be part of “the future”.

Well, it happened. The first reported autonomous car crash fatality. Testing suspended, people freaking out. But… should they?

If I were a less lazy researcher, I would track down local newspapers calling for an end to automobiles in the 1890s, after the first cars killed a pedestrian. We could have a good chuckle at how short-sighted those hat-and-vest-wearing Luddites were way back when, what with their trying to curb the inevitable advance of American car culture and all.

But let’s be honest (and check Wikipedia): “National Highway Traffic Safety Administration (NHTSA) 2016 data shows 37,461 people were killed in 34,436 motor vehicle crashes, an average of 102 per day.” And that’s now, after all that pesky safety stuff Ralph Nader fought for.

So people being killed by (shall we call them “driver-ful”?) cars, though widely considered a terrible tragedy, is something we accept as part of the price we pay to have cars at all, and cars are something we mostly agree we mostly need.

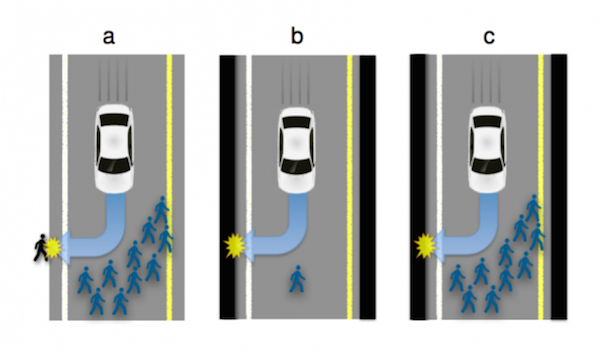

What’s different about crashes without a driver that makes each incident generate so much interest?

Is that going to change in the coming years as we get used to autonomous cars being part of everyday life?