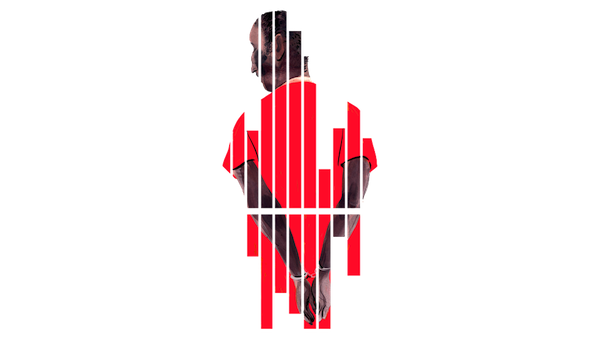

If free will technically doesn’t exist, is anything our fault?

Read this post, have a conversation, or don’t. It’s really not up to you anyway.

Want to get really philosophical? How about having the argument to end (or begin) all arguments: Do we really even have free will?

For context, a growing body of neuroscience research suggests that… we kind of don't. Or at least it seems that way, given findings that the brain activity behind a movement can begin before we're consciously aware of deciding to make it. And that's only one piece of the puzzle. This article in The Atlantic goes into more of the science:

> The contemporary scientific image of human behavior is one of neurons firing, causing other neurons to fire, causing our thoughts and deeds, in an unbroken chain that stretches back to our birth and beyond. In principle, we are therefore completely predictable. If we could understand any individual’s brain architecture and chemistry well enough, we could, in theory, predict that individual’s response to any given stimulus with 100 percent accuracy.
>
> …
>
> Yes, indeed. When asked to take a math test, with cheating made easy, the group primed to see free will as illusory proved more likely to take an illicit peek at the answers. When given an opportunity to steal—to take more money than they were due from an envelope of $1 coins—those whose belief in free will had been undermined pilfered more. On a range of measures, Vohs told me, she and Schooler found that “people who are induced to believe less in free will are more likely to behave immorally.”

The article also makes plain that, to a certain degree, the same scientists who are disproving free will are in effect saying, “Please do not act as if this truth we’re discovering is actually true.” They know that if we throw the premise of free will out the window, life fundamentally changes, and not necessarily for the better.

If your life is a series of reactions to the world that aren’t really up to you, can you be blamed for doing wrong?