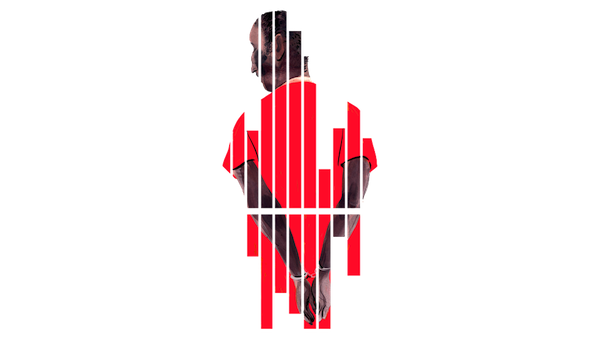

Is it fair to sentence prisoners based on what they might do?

A bit late to this one, but FiveThirtyEight ran a piece on using statistical modeling to aid in prison sentencing, and it's sure to spark debate.

> There are more than 60 risk assessment tools in use across the U.S., and they vary widely. But in their simplest form, they are questionnaires — typically filled out by a jail staff member, probation officer or psychologist — that assign points to offenders based on anything from demographic factors to family background to criminal history. The resulting scores are based on statistical probabilities derived from previous offenders’ behavior. A low score designates an offender as “low risk” and could result in lower bail, less prison time or less restrictive probation or parole terms; a high score can lead to tougher sentences or tighter monitoring.
>
> The risk assessment trend is controversial. Critics have raised numerous questions: Is it fair to make decisions in an individual case based on what similar offenders have done in the past? Is it acceptable to use characteristics that might be associated with race or socioeconomic status, such as the criminal record of a person’s parents? And even if states can resolve such philosophical questions, there are also practical ones: What to do about unreliable data? Which of the many available tools — some of them licensed by for-profit companies — should policymakers choose?
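
To make the point-scoring mechanism in that description concrete, here is a minimal Python sketch of how such a questionnaire might turn answers into a total score and then a risk tier. Every item, point value, and cutoff below is hypothetical and invented for illustration; it is not modeled on any of the real tools the article mentions, which derive their weights from data on past offenders.

```python
from dataclasses import dataclass

# Hypothetical point weights, invented for illustration only.
# Real tools calibrate their weights against outcomes of past offenders.
POINTS_PER_PRIOR_CONVICTION = 2
POINTS_AGE_UNDER_25 = 3
POINTS_UNEMPLOYED = 1
POINTS_PARENT_HAS_RECORD = 2

# Hypothetical tier cutoffs, checked from highest to lowest:
# a total at or above a cutoff receives that label.
TIERS = [(9, "high"), (5, "medium"), (0, "low")]


@dataclass
class Questionnaire:
    """Answers a staff member might record for one offender (hypothetical items)."""
    prior_convictions: int
    age_under_25: bool
    unemployed: bool
    parent_has_record: bool


def score(q: Questionnaire) -> int:
    """Sum the points assigned for each answer."""
    total = POINTS_PER_PRIOR_CONVICTION * q.prior_convictions
    if q.age_under_25:
        total += POINTS_AGE_UNDER_25
    if q.unemployed:
        total += POINTS_UNEMPLOYED
    if q.parent_has_record:
        total += POINTS_PARENT_HAS_RECORD
    return total


def risk_tier(total: int) -> str:
    """Return the label of the highest cutoff the total meets."""
    for cutoff, label in TIERS:
        if total >= cutoff:
            return label
    raise ValueError("score must be non-negative")


if __name__ == "__main__":
    answers = Questionnaire(prior_convictions=1, age_under_25=True,
                            unemployed=False, parent_has_record=True)
    total = score(answers)
    print(total, risk_tier(total))  # prints "7 medium"
```

Note how the hypothetical `parent_has_record` item adds points for something outside the offender's own conduct, which is exactly the kind of proxy characteristic the critics quoted above are worried about.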

It’s almost as if they’re stealing my schtick right there in the article. But one overriding question strikes me as the most interesting angle.

Is it inherently wrong to sentence people based on predicted behavior, even if using this more mathematical model is a net positive for society?

If judges’ subjective sentencing gets a certain percentage of punitive imprisonments “wrong” today, but this system works “better” overall, which approach is more unfair?