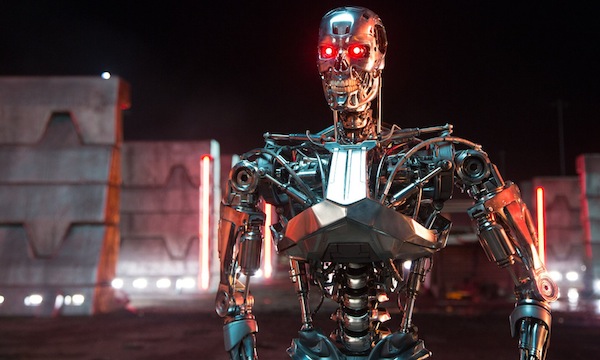

should we ban AI-controlled weapons outright?

And now for the flip side of the robots-replacing-humans coin. Not that I was going for an AI theme this week, but as it turns out, the world’s top AI scientists have proposed an international ban on AI-controlled offensive weapons.

The letter, presented at the International Joint Conference on Artificial Intelligence in Buenos Aires, Argentina, was signed by Tesla’s Elon Musk, Apple co-founder Steve Wozniak, Google DeepMind chief executive Demis Hassabis and professor Stephen Hawking along with 1,000 AI and robotics researchers.

The letter states: “AI technology has reached a point where the deployment of [autonomous weapons] is – practically if not legally – feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”

…

Should one military power start developing systems capable of selecting targets and operating autonomously without direct human control, it would start an arms race similar to the one for the atom bomb, the authors argue. Unlike nuclear weapons, however, AI requires no specific hard-to-create materials and will be difficult to monitor.

“The endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow. The key question for humanity today is whether to start a global AI arms race or to prevent it from starting,” said the authors.

Time to have all the arguments we’ve had for years now about the ethics of drone warfare, with a new and exciting layer of sci-fi conjecture.

Assuming the nations and corporations of the world all comply, is there any argument against this ban?

If the world can’t agree on an outright ban, what does the new arms race look like?

If AI weapons do move forward, what regulations or limitations would you put in place to prevent disaster — or even apocalypse?